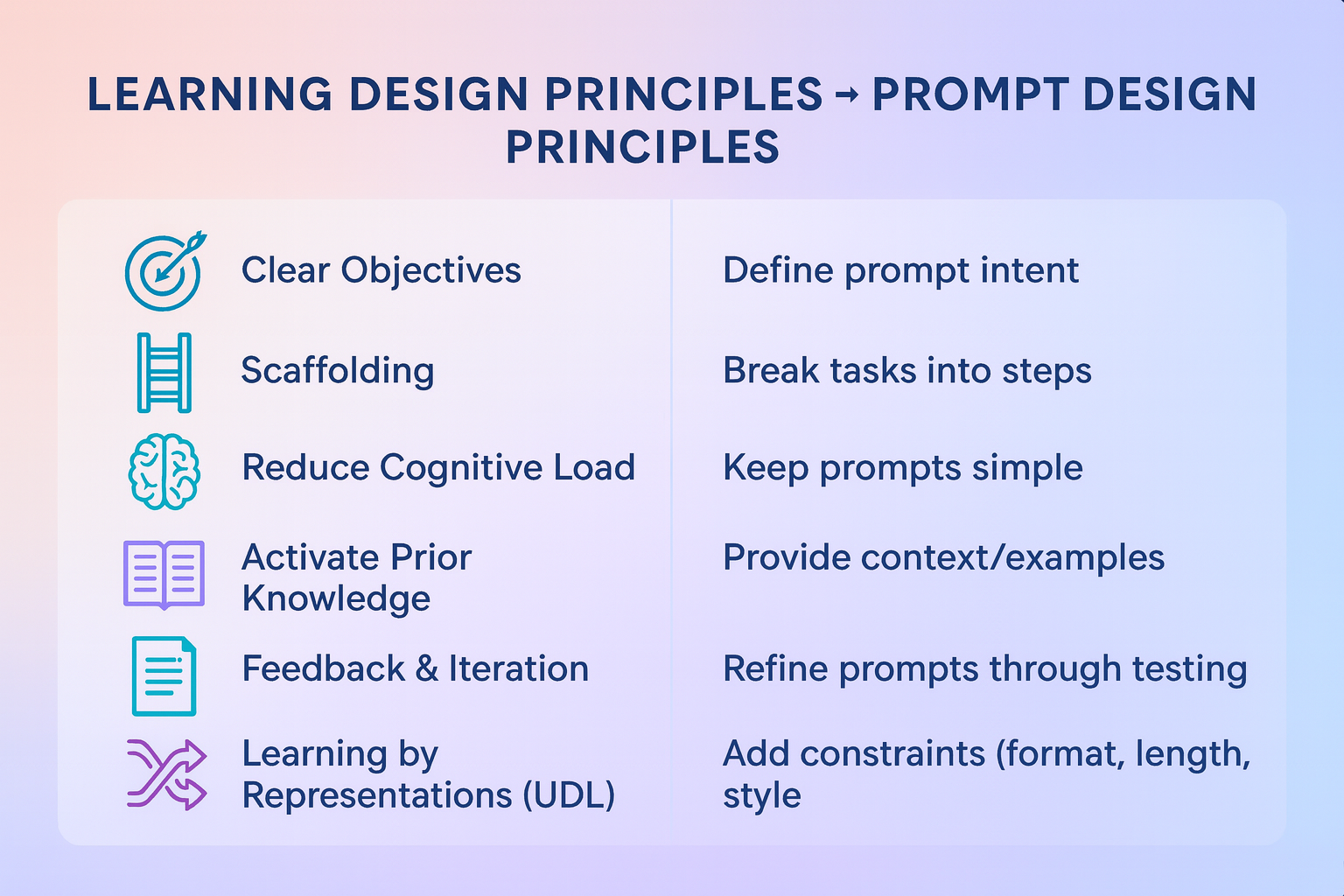

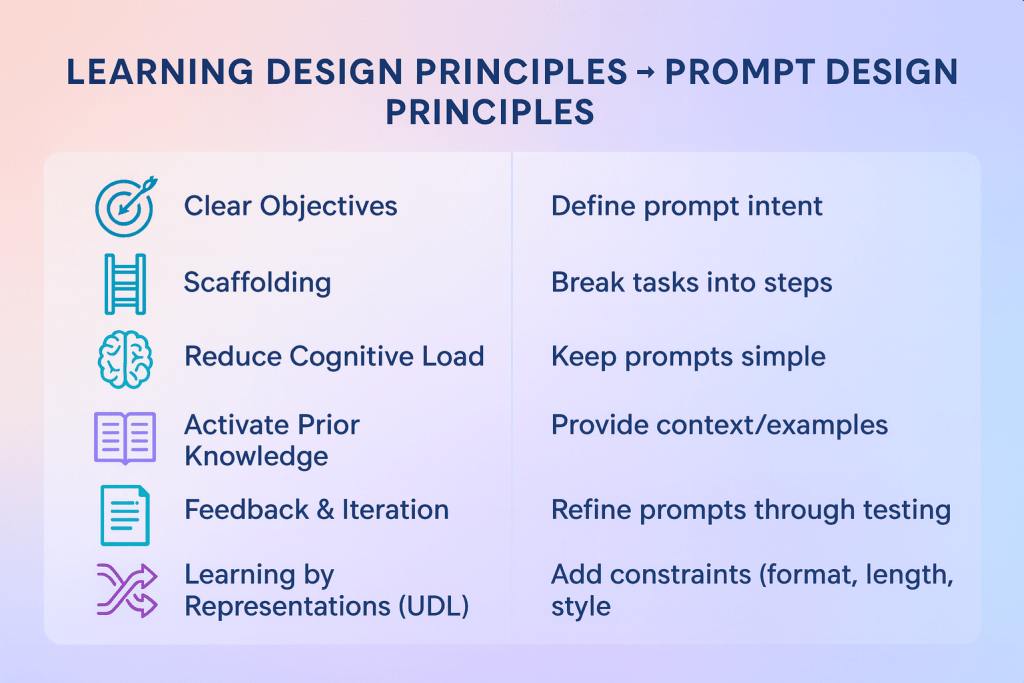

As a learning designer, I’ve worked with principles that help people absorb knowledge more effectively. Over the past few years, as I’ve experimented with GenAI prompting in many ways, I’ve noticed that many of those same principles transfer surprisingly well to prompt design.

I mapped a few side by side, and the parallels are striking. For example, just as we scaffold learning for students, we can scaffold prompts for AI.

Here’s a snapshot of the framework:

- Clear objectives → Define prompt intent

- Scaffolding → Break tasks into steps

- Reduce cognitive load → Keep prompts simple

- And more…

Instructional design and prompt design share more than I expected.

Which of these parallels resonates most with your work?