If you’ve used ChatGPT or Copilot and received an answer that sounded confident but was completely wrong, you’ve experienced a hallucination. These misleading outputs are a known challenge in generative AI—and while some causes are technical, others are surprisingly content-driven.

As a content creator, you might think hallucinations are out of your hands. But here’s the truth: you have more influence than you realize.

Let’s break it down.

The Three Types of Hallucinations (And Where You Fit In)

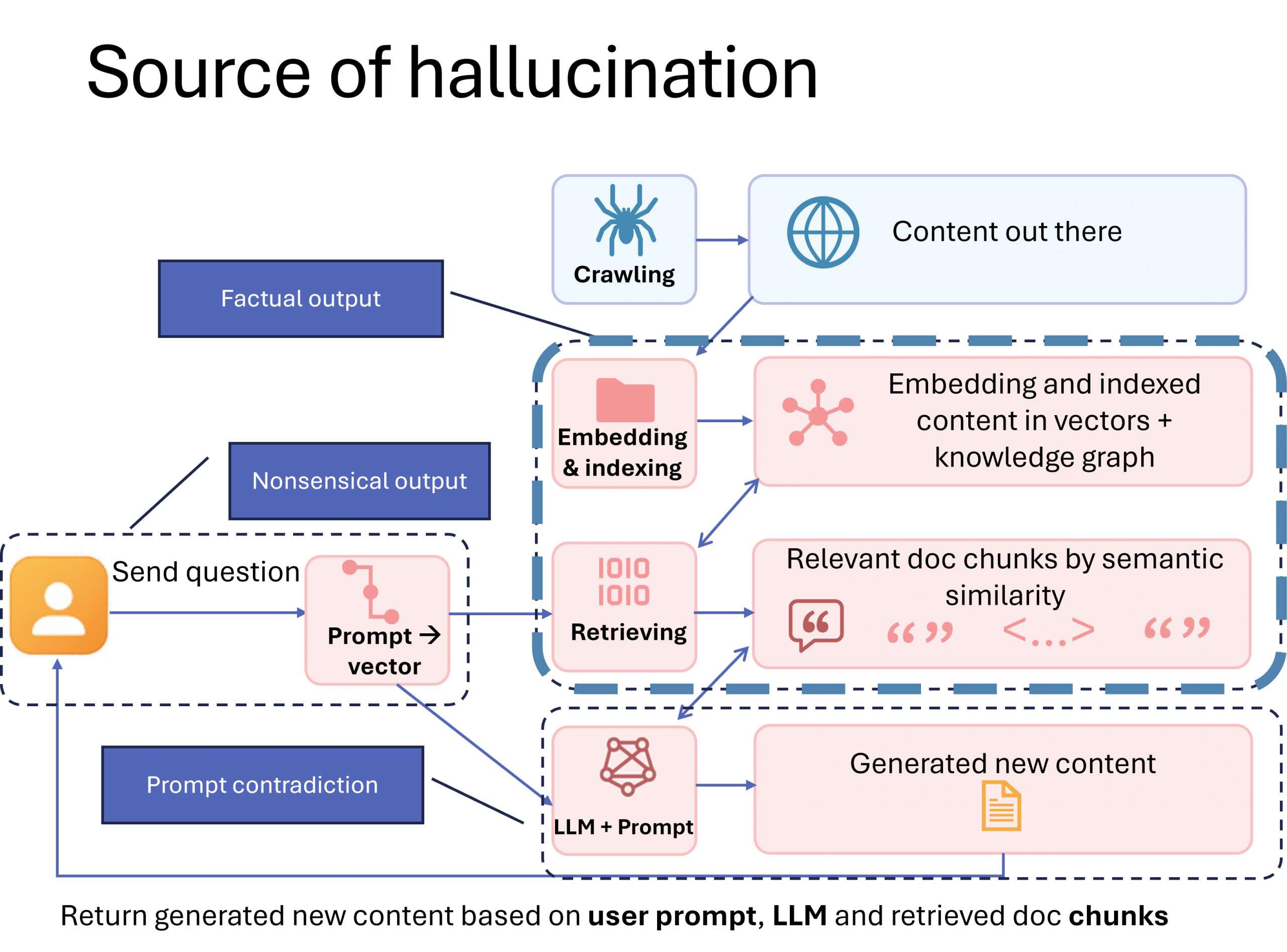

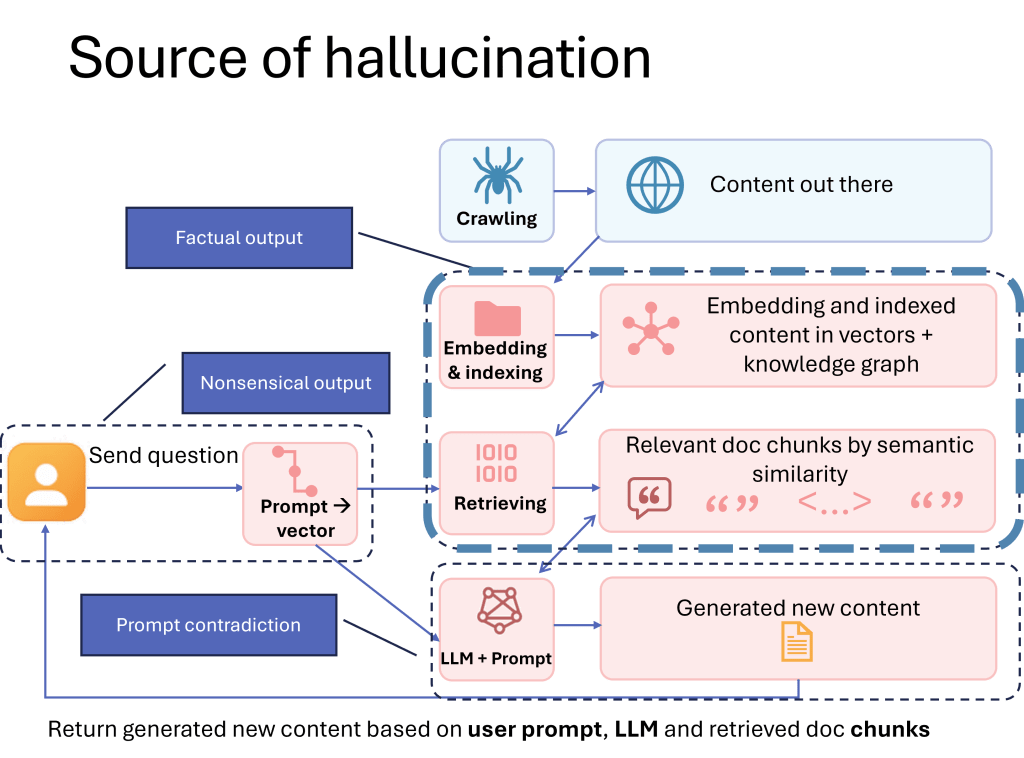

Generative AI hallucinations typically fall into three practical categories. (Note: Academic research usually classifies hallucinations as either “intrinsic,” meaning the output contradicts the source or prompt, or “extrinsic,” meaning the output adds information that cannot be verified against the source. Our framework translates these concepts into actionable categories for content creators.)

- Nonsensical Output

The AI produces content that’s vague, incoherent, or just doesn’t make sense.

Cause: Poorly written or ambiguous prompts.

Your Role: Help users write better prompts by providing examples, templates, or guidance.

- Factual Contradiction

The AI gives answers that are clear and confident—but wrong, outdated, or misleading.

Cause: The AI can’t find accurate or relevant information to base its response on.

Your Role: Create high-quality, domain-specific content that’s easy for AI to find and understand.

- Prompt Contradiction

The AI’s response contradicts the user’s prompt, often due to internal safety filters or misalignment.

Cause: Model-level restrictions or misinterpretation.

Your Role: Limited—this is mostly a model design issue.

Where Does AI Get Its Information?

Modern AI systems increasingly use RAG (Retrieval-Augmented Generation) to ground their responses in real data. Instead of relying solely on training data, they actively search for and retrieve relevant content before generating answers. Learn more about how AI discovers and synthesizes content.
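To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is not how any particular product works: real systems typically rank content with vector embeddings, whereas this toy version ranks by shared words. The function names and the `KNOWLEDGE_BASE` entries are invented for illustration.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Rank passages by shared words with the query (a stand-in for vector search)."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(p)), p) for p in passages]
    # Keep only passages that share at least one term, best match first.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [p for _, p in ranked[:top_k]]

def build_grounded_prompt(query: str, passages: list[str]) -> str:
    """Assemble what a generator model would receive: retrieved context, then the question."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical knowledge base entries, invented for this example.
KNOWLEDGE_BASE = [
    "To reset your password, open Settings and choose Security.",
    "Invoices are emailed on the first day of each month.",
    "Password resets expire after 24 hours.",
]

prompt = build_grounded_prompt("How do I reset my password?", KNOWLEDGE_BASE)
print(prompt)
```

The point of the sketch is the order of operations: the model only sees the passages that survive retrieval, so content that is never retrieved cannot inform the answer.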

Depending on the system, AI pulls data from:

- Internal Knowledge Bases (e.g., enterprise documentation)

- The Public Web (e.g., websites, blogs, forums)

- Hybrid Systems (a mix of both)

If your content is published online, it can become part of the “source of truth” that AI systems retrieve from. That means your work can directly affect whether AI gives accurate answers or hallucinates.

The Discovery–Accuracy Loop

Here’s how it works:

- If AI can’t find relevant content → it guesses based on general training data.

- If AI finds partial content → it fills in the gaps with assumptions.

- If AI finds complete and relevant content → it is far more likely to deliver accurate answers.
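The three cases above can be sketched as a simple coverage check. This is an illustration only: real systems do not expose a score like this, and the `coverage` measure, the thresholds, and the sample terms are all assumptions made for the example.

```python
import re

def terms(text: str) -> set[str]:
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def coverage(question_terms: set[str], retrieved: list[str]) -> float:
    """Fraction of the question's key terms that appear in the retrieved content."""
    if not question_terms:
        return 0.0
    found = set().union(*(terms(p) for p in retrieved))
    return len(question_terms & found) / len(question_terms)

def likely_outcome(question_terms: set[str], retrieved: list[str]) -> str:
    """Map coverage onto the three cases of the loop (illustrative thresholds)."""
    score = coverage(question_terms, retrieved)
    if score == 0:
        return "guess from training data"
    if score < 1:
        return "fill gaps with assumptions"
    return "answer from retrieved content"

# Hypothetical key terms for a question about international refund deadlines.
KEY_TERMS = {"refund", "deadline", "international"}
PARTIAL = ["The refund deadline is 30 days."]
COMPLETE = PARTIAL + ["International orders follow the same policy."]

for label, docs in [("nothing", []), ("partial", PARTIAL), ("complete", COMPLETE)]:
    print(label, "->", likely_outcome(KEY_TERMS, docs))
```

In the partial case the content never mentions international orders, which is exactly the kind of gap a model may paper over with an assumption.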

So what does this mean for you?

Your Real Impact as a Content Creator

You can’t control how AI is trained, but you can control two critical things:

- The quality of content available for retrieval

- The likelihood that your content gets discovered and indexed

And here’s the key insight:

This is where content creators have the greatest impact—by ensuring that content is not only high-quality and domain-specific, but also structured into discoverable chunks that AI systems can retrieve and interpret accurately.

Think of it like this: if your content is buried in long paragraphs, lacks clear headings, or isn’t tagged properly, AI might miss it—or misinterpret it. But if it’s chunked into clear, well-labeled sections, it’s far more likely to be picked up and used correctly. This shift from keywords to chunks is fundamental to how AI indexing differs from traditional search.

Actionable Tips for AI-Optimized Content

Structure for Chunking

- Use clear, descriptive headings that summarize the content below them

- Write headings as questions when possible (“How does X work?” instead of “X Overview”)

- Keep paragraphs focused on single concepts (3–5 sentences max)

- Create semantic sections that can stand alone as complete thoughts

- Include Q&A pairs for common queries—this mirrors how users interact with AI

- Use bullet points and numbered lists to break down complex information
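To see why headings matter, consider a rough sketch of how an indexing pipeline might split a page into chunks. It assumes Markdown-style `#` headings and a one-chunk-per-heading strategy; actual pipelines vary widely, and the `Chunk` class and sample text are hypothetical.

```python
import re
from dataclasses import dataclass

@dataclass
class Chunk:
    heading: str
    body: str

def chunk_by_heading(markdown_text: str) -> list[Chunk]:
    """Split a Markdown document into one chunk per heading."""
    chunks: list[Chunk] = []
    heading, lines = "(untitled)", []
    for line in markdown_text.splitlines():
        match = re.match(r"^#{1,6}\s+(.*)", line)
        if match:
            # A new heading closes the previous chunk, if it has any text.
            if any(l.strip() for l in lines):
                chunks.append(Chunk(heading, "\n".join(lines).strip()))
            heading, lines = match.group(1).strip(), []
        else:
            lines.append(line)
    # Flush the final chunk after the last line.
    if any(l.strip() for l in lines):
        chunks.append(Chunk(heading, "\n".join(lines).strip()))
    return chunks

# Hypothetical page content written with question-style headings.
SAMPLE = """\
## How do I reset my password?
Open Settings, choose Security, then select Reset password.

## How long is a reset link valid?
A reset link expires after 24 hours.
"""

chunks = chunk_by_heading(SAMPLE)
for chunk in chunks:
    print(f"{chunk.heading} -> {chunk.body}")
```

Each chunk here carries its own question and answer, so it still makes sense when retrieved on its own. The same text under a single vague heading would come out as one undifferentiated chunk.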

Improve Discoverability

- Front-load key information in each section—AI often prioritizes early content

- Define technical terms clearly within your content, not just in glossaries

- Include contextual metadata through schema markup and structured data

- Write descriptive alt text for images and diagrams
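As one example of schema markup, the sketch below generates a JSON-LD block using the schema.org `Article` type. The property names (`headline`, `description`, `datePublished`, `dateModified`, `keywords`) come from schema.org; the helper function and all of the values are hypothetical placeholders, and which properties a given AI system actually reads is not something this example can promise.

```python
import json
from datetime import date

def article_json_ld(headline: str, description: str,
                    published: date, modified: date,
                    keywords: list[str]) -> str:
    """Build schema.org Article metadata as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "description": description,
        # ISO 8601 dates make publication and update times machine-readable.
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
        "keywords": keywords,
    }
    return json.dumps(data, indent=2)

def as_script_tag(json_ld: str) -> str:
    """Wrap JSON-LD in the script element used to embed it in an HTML page."""
    return f'<script type="application/ld+json">\n{json_ld}\n</script>'

# Placeholder values, invented for illustration.
markup = article_json_ld(
    headline="How do I reset my password?",
    description="Step-by-step instructions for resetting an account password.",
    published=date(2024, 1, 15),
    modified=date(2024, 6, 1),
    keywords=["password reset", "account security"],
)
print(as_script_tag(markup))
```

The resulting `<script>` element would go in the page's HTML, where crawlers that support structured data can read it alongside the visible text.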

Enhance Accuracy

- Date your content clearly, especially for time-sensitive information

- Link related concepts within your content to provide context

- Be explicit about scope: what your content covers and what it doesn’t

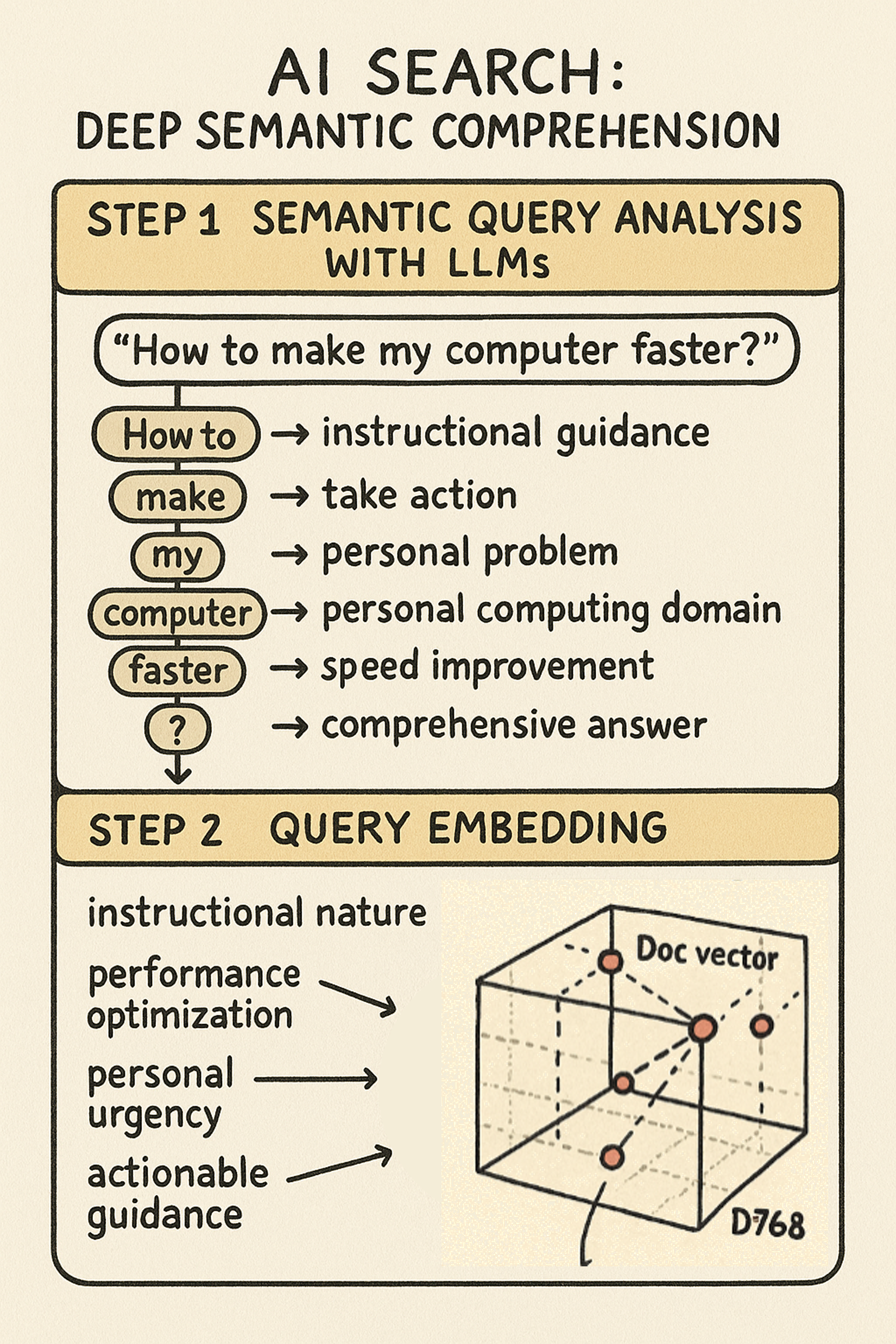

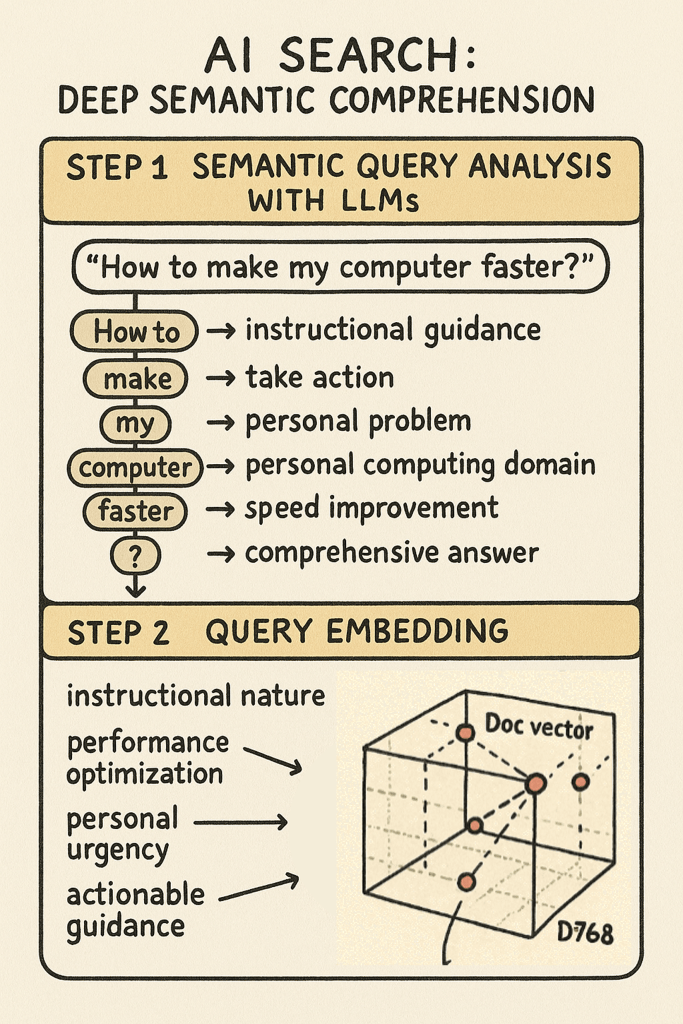

Understand Intent Alignment

AI systems are evolving to focus more on intent than just keyword matching. That means your content should address the “why” behind user queries—not just the “what.”

Think about the deeper purpose behind a search. Are users trying to solve a problem? Make a decision? Learn a concept? Your content should reflect that.

The Bottom Line

As AI continues to evolve from retrieval to generative systems, your role as a content creator becomes more critical—not less. By structuring your content for AI discoverability and comprehension, you’re not just improving search rankings; you’re actively reducing the likelihood that AI will hallucinate when answering questions in your domain.

So the next time you create or update content, ask yourself:

“Can an AI system easily find, understand, and accurately use this information?”

If the answer is yes, you’re part of the solution.