For documentation writers managing large sets of content—enterprise knowledge bases, multi-product help portals, or internal wikis—the challenge goes beyond polishing individual sentences. You need to:

- Keep a consistent voice and style across hundreds of articles.

- Spot duplicate or overlapping topics.

- Maintain accurate metadata and links.

- Gain insights into content gaps and structure.

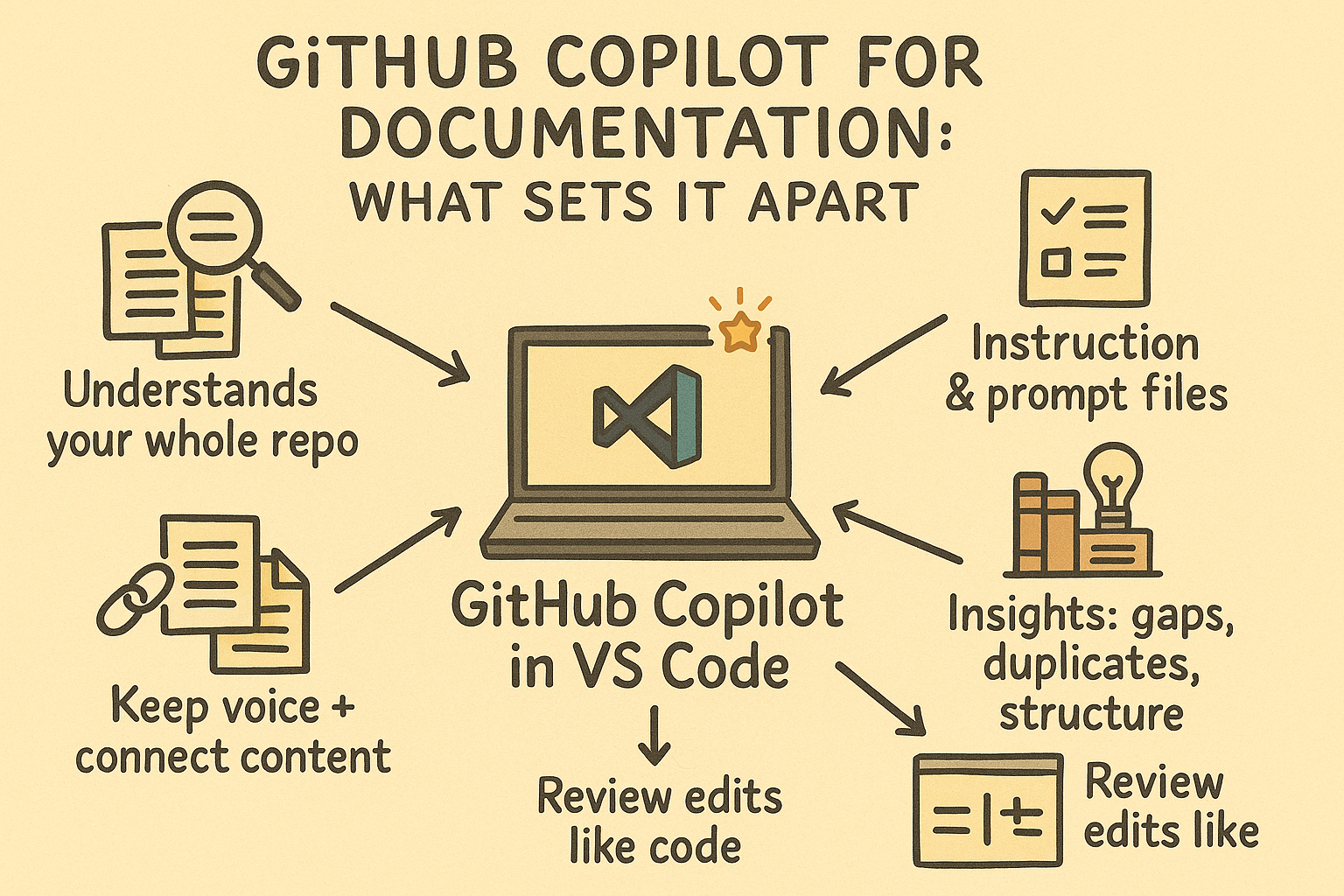

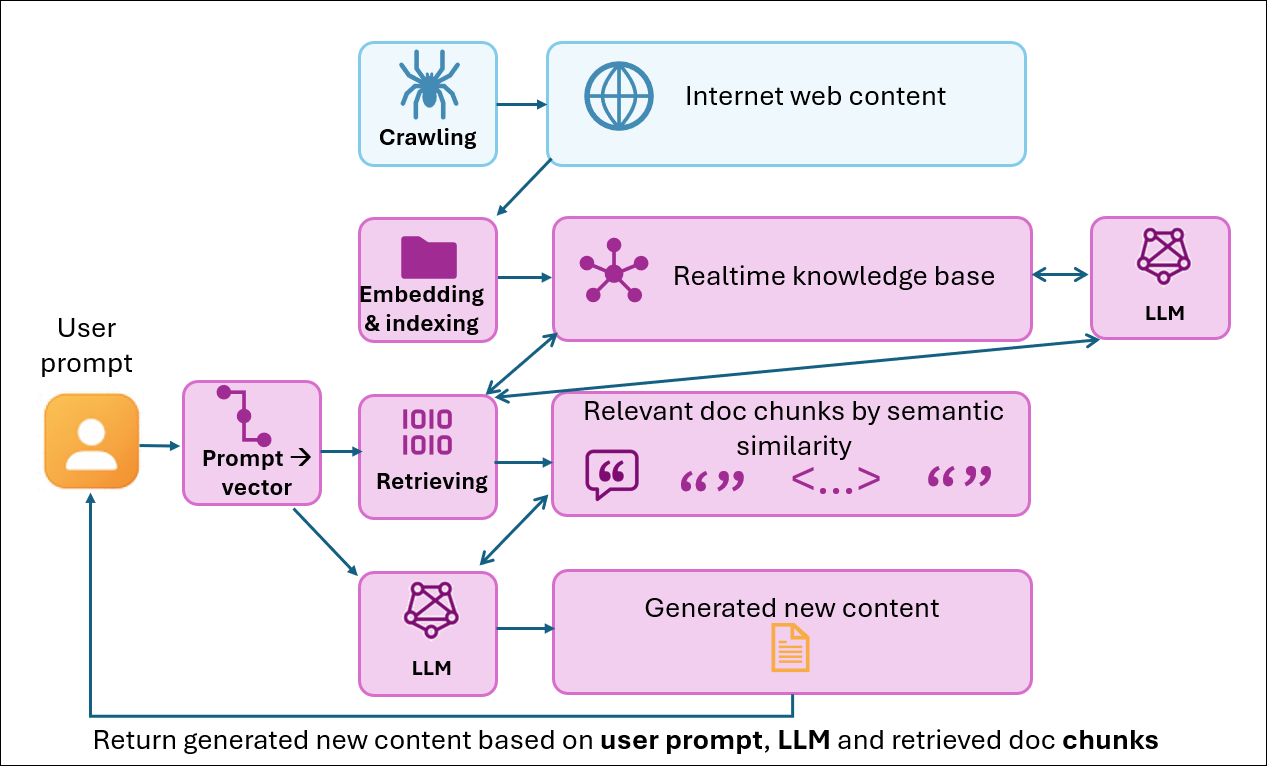

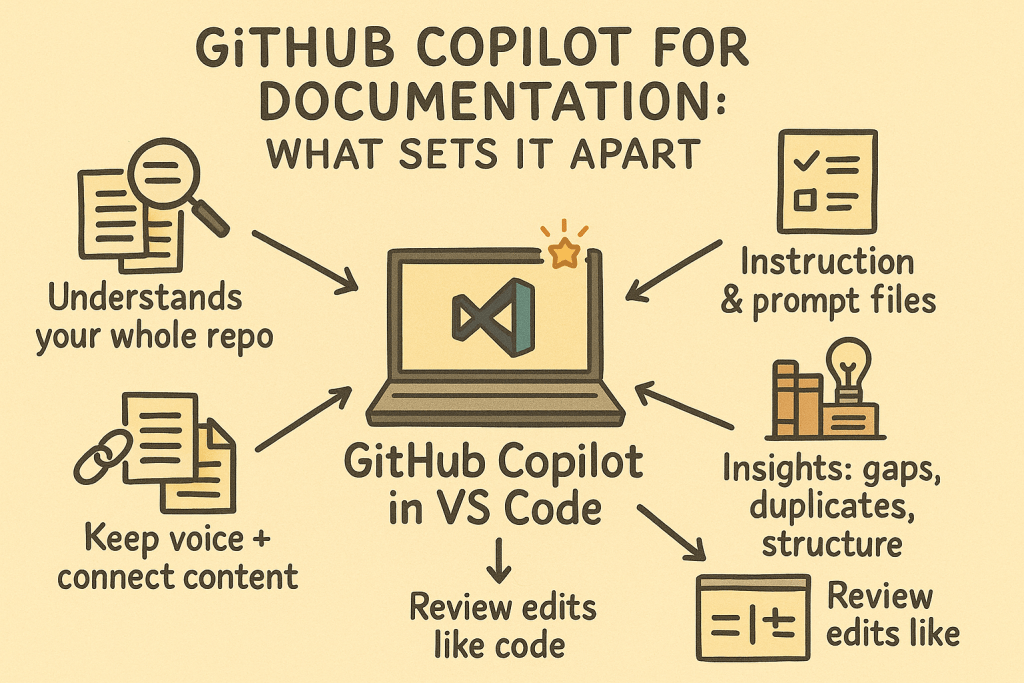

This is where GitHub Copilot inside Visual Studio Code stands out. Unlike generic Gen-AI chatbots, Copilot has visibility across your entire content set, not just the file you’re editing. With carefully crafted prompts and instructions, that means you can ask it to:

- Highlight potential gaps, redundancies, or structural issues.

- Suggest rewrites that preserve consistency across articles.

- Surface related content to link or cross-reference.

In other words, Copilot isn’t just a text improver—it’s a content intelligence partner for documentation at scale. And if you’re already working in VS Code, it integrates directly into your workflow without requiring a new toolset.

What Can GitHub Copilot Do for Your Documentation?

Once installed, GitHub Copilot can work directly on your .md, .html, .xml, or .yml files. Here’s how it helps across both single documents and large collections:

Refine Specific Text Blocks

Highlight a section and ask Copilot to improve the writing. This makes it easy to sharpen clarity and tone in targeted areas.

Suggest Edits Across the Entire Article

Use Copilot Chat to get suggestions for consistency and flow across an entire piece.

Fill in Metadata and Unfinished Sections

Copilot can auto-complete metadata fields or unfinished drafts, reducing the chance of missing key details.

Surface Relevant Links

While you’re writing, Copilot may suggest links to related articles in your repository—helping you connect content for the reader.

Spot Duplicates and Gaps (emerging use)

With tailored prompts, you can ask Copilot to scan for overlap between articles or flag areas where documentation is thin. This gives you content architecture insights, not just sentence-level edits.
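One way to sanity-check an overlap report from Copilot is a quick script of your own. The sketch below is not how Copilot works; it is a rough stand-in that compares article text with Python's standard `difflib` module and lists pairs above a similarity threshold. The function name, the 0.8 threshold, and the sample articles are all hypothetical.

```python
from difflib import SequenceMatcher
from itertools import combinations


def find_overlapping_articles(articles, threshold=0.8):
    """Return (name_a, name_b, ratio) for article pairs whose text is similar.

    `articles` maps a file name to its text. The threshold is an assumed
    starting point, not a recommended value; tune it for your content.
    """
    overlaps = []
    # Compare every pair once, in a stable (alphabetical) order.
    for (name_a, text_a), (name_b, text_b) in combinations(sorted(articles.items()), 2):
        ratio = SequenceMatcher(None, text_a.lower(), text_b.lower()).ratio()
        if ratio >= threshold:
            overlaps.append((name_a, name_b, round(ratio, 2)))
    return overlaps


# Hypothetical sample content standing in for files in a docs repository.
sample_articles = {
    "billing-overview.md": "Pay-as-you-go billing charges you only for the resources you use each month.",
    "billing-faq.md": "Pay-as-you-go billing charges you only for the resources you use every month.",
    "install-guide.md": "Download the installer, run it, and follow the prompts to finish setup.",
}

for name_a, name_b, ratio in find_overlapping_articles(sample_articles):
    print(f"{name_a} <-> {name_b}: {ratio}")
```

Character-level similarity only catches near-verbatim overlap. Two articles that cover the same topic in different words will not be flagged, so treat a check like this as a complement to Copilot's analysis, not a replacement for it.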

What do you need to set up GitHub Copilot?

To set up GitHub Copilot, you will need to:

- Install the GitHub Copilot extension.

Note: While GitHub Copilot offers a free tier, paid plans provide additional features and higher usage limits.

Why GitHub Copilot Is Different from Copilot in Word or Other Gen-AI Chatbots

At first glance, you might think these features look similar to what Copilot in Word or other generative AI chatbots can do. But GitHub Copilot offers unique advantages for documentation work:

- Cross-Document Awareness

Because it’s embedded in VS Code, Copilot has visibility into your entire local repo. For example, if you’re writing about pay-as-you-go billing in one article, it can pull phrasing or context from another relevant file almost instantly.

- Enterprise Content Intelligence

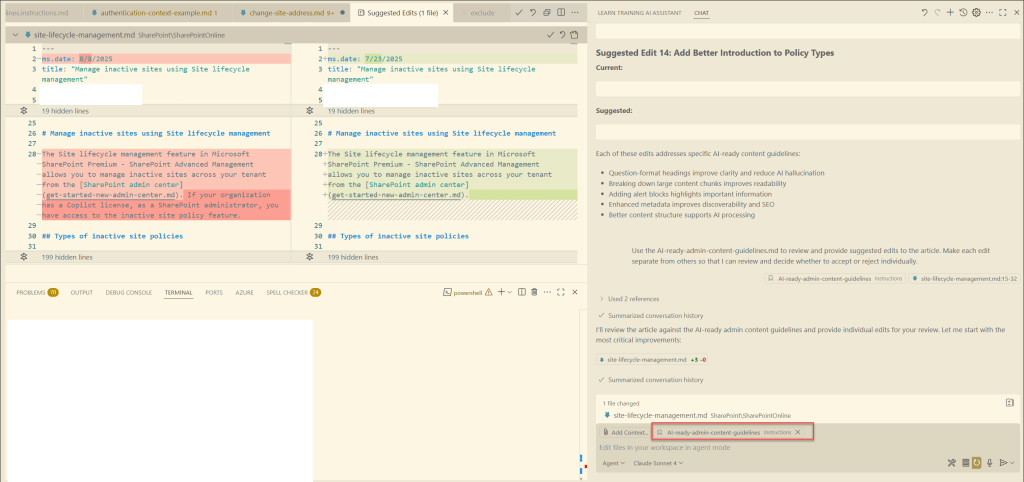

With prompts, you can ask Copilot to analyze your portfolio: identify duplicate topics, find potential links, and even suggest improvements to your information architecture. This is especially valuable for knowledge bases and enterprise-scale content libraries.

- Code-Style Edit Reviews

Visual Studio Code with GitHub Copilot can show suggested edits the way it shows code changes, so you can review each edit and accept or reject it as you would when coding. This differs from generic Gen AI content editors, which either apply edits directly or only suggest them.

- Customizable Rules and Prompts

You can set up an instruction.md file that defines rules for tone, heading style, or terminology. You can also create reusable prompt files and call them with / during chats. This ensures your writing is not just polished, but also consistent with your team’s standards.

Together, these capabilities transform GitHub Copilot from a document-level writing assistant into a documentation co-architect.

Limitations

Like any AI tool, GitHub Copilot isn’t perfect. Keep these in mind:

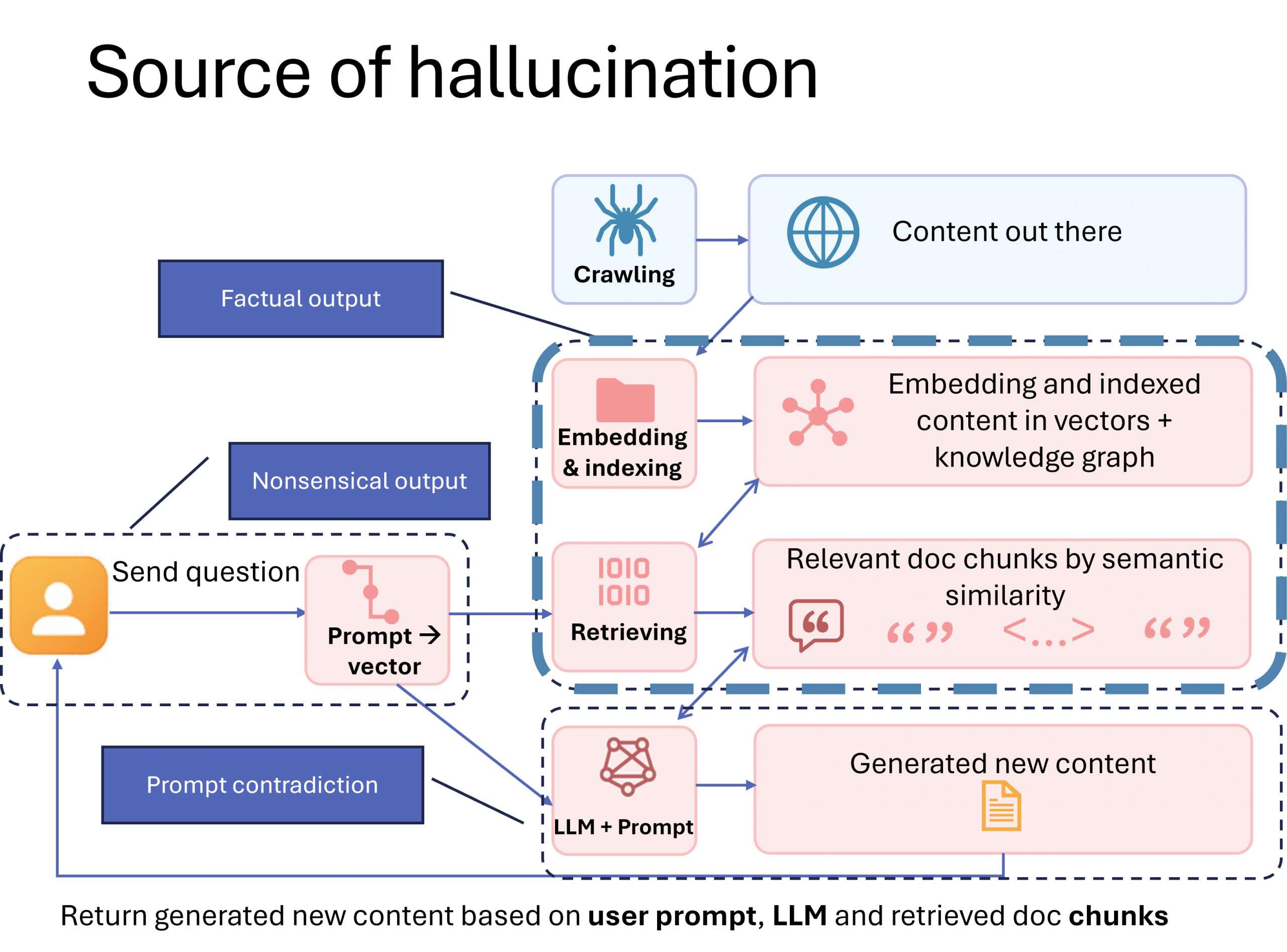

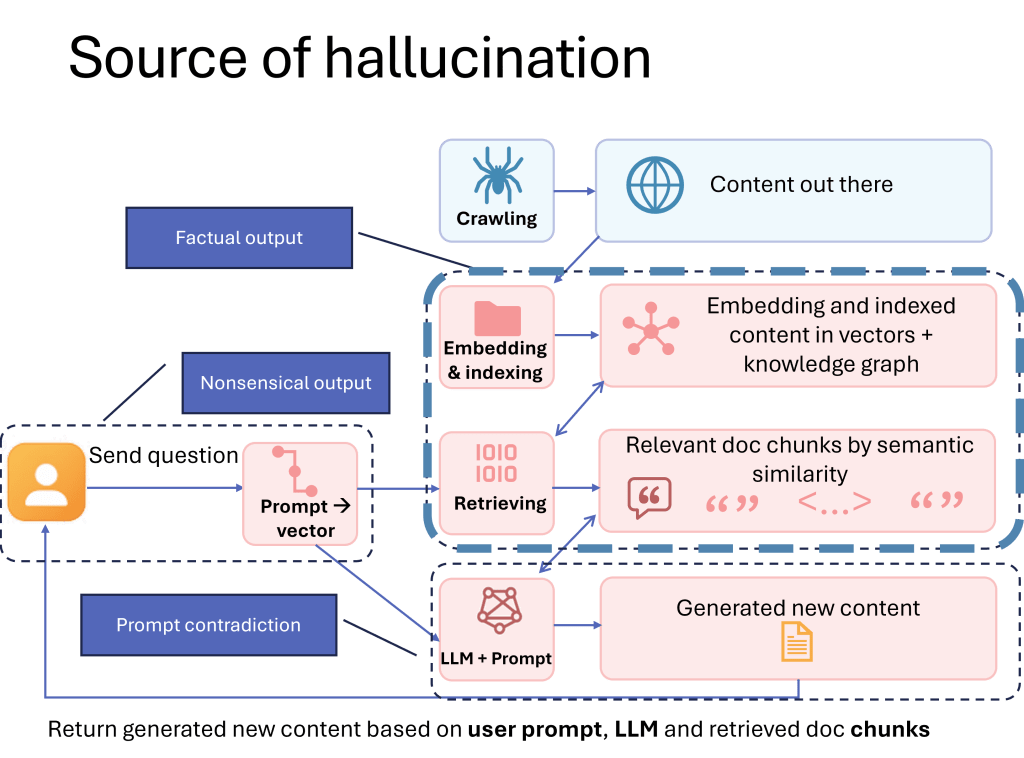

Always review suggestions

Like any other Gen AI tool, GitHub Copilot can hallucinate. Always review its suggestions and validate its edits.
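Part of that validation can be mechanical. As a minimal sketch, assuming your articles are Markdown and use relative links, the following Python snippet checks that each relative link target in an article actually exists on disk, which can catch a suggested link to a file that is not there. The function name and the sample file names are hypothetical, and the regular expression only covers simple inline links.

```python
import re
import tempfile
from pathlib import Path

# Matches simple inline Markdown links such as [text](target.md).
# Assumption: no nested brackets and no reference-style links.
# Image links like ![alt](image.png) are matched and checked as well.
LINK_PATTERN = re.compile(r"\[[^\]]*\]\(([^)\s]+)\)")


def find_broken_links(article_path):
    """Return relative link targets in a Markdown file that do not exist on disk."""
    article_path = Path(article_path)
    broken = []
    for target in LINK_PATTERN.findall(article_path.read_text(encoding="utf-8")):
        if re.match(r"^[a-zA-Z][a-zA-Z0-9+.-]*:", target) or target.startswith("#"):
            continue  # skip external URLs (https:, mailto:) and in-page anchors
        file_part = target.split("#", 1)[0]  # drop any #section suffix
        if not (article_path.parent / file_part).exists():
            broken.append(target)
    return broken


# Hypothetical demo: build a tiny docs folder and check one article.
with tempfile.TemporaryDirectory() as docs_dir:
    docs = Path(docs_dir)
    (docs / "billing.md").write_text("# Billing\n", encoding="utf-8")
    (docs / "overview.md").write_text(
        "See [billing](billing.md#rates), [pricing](pricing.md), "
        "and [GitHub](https://github.com).\n",
        encoding="utf-8",
    )
    demo_broken = find_broken_links(docs / "overview.md")
    print(demo_broken)
```

A check like this only confirms that a target file exists. Whether the linked article is actually relevant still needs a human read.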

Wrap-Up: Copilot as Your Content Partner

GitHub Copilot inside Visual Studio Code isn’t just another AI writing assistant—it’s a tool that scales with your entire content ecosystem.

- It refines text, polishes full articles, completes metadata, and suggests links.

- It leverages cross-document awareness to reveal gaps, duplicates, and structural improvements.

- It enforces custom rules and standards, ensuring consistency across hundreds of files.

And here’s where the real advantage comes in: with careful crafting of prompts and instruction files, Copilot becomes more than a reactive assistant. You can guide it to apply your team’s style, enforce terminology, highlight structural issues, and even surface information architecture insights. In other words, the quality of what Copilot gives you is shaped by the quality of what you feed it.

For content creators managing large sets of documentation, Copilot is more than a co-writer—it’s a content intelligence partner and co-architect. With thoughtful setup and prompt design, it helps you maintain quality, speed, and consistency—even at enterprise scale.

👉 Try it in your next documentation sprint and see how it transforms the way you manage your whole body of content.