Ever had GenAI confidently answer your question, then backtrack when you challenged it?

Example:

Me: Is the earth flat or a sphere?

AI: A sphere.

Me: Are you sure? Why isn’t it flat?

AI: Actually, good point. The earth is flat, because…

I have this kind of conversation with AI a lot. Then yesterday I came across this paper and learned that the behavior has a name: “intrinsic self-correction failure.”

LLMs sometimes “overthink” and overturn a correct answer when asked to refine it, much like humans caught in perfectionism bias.

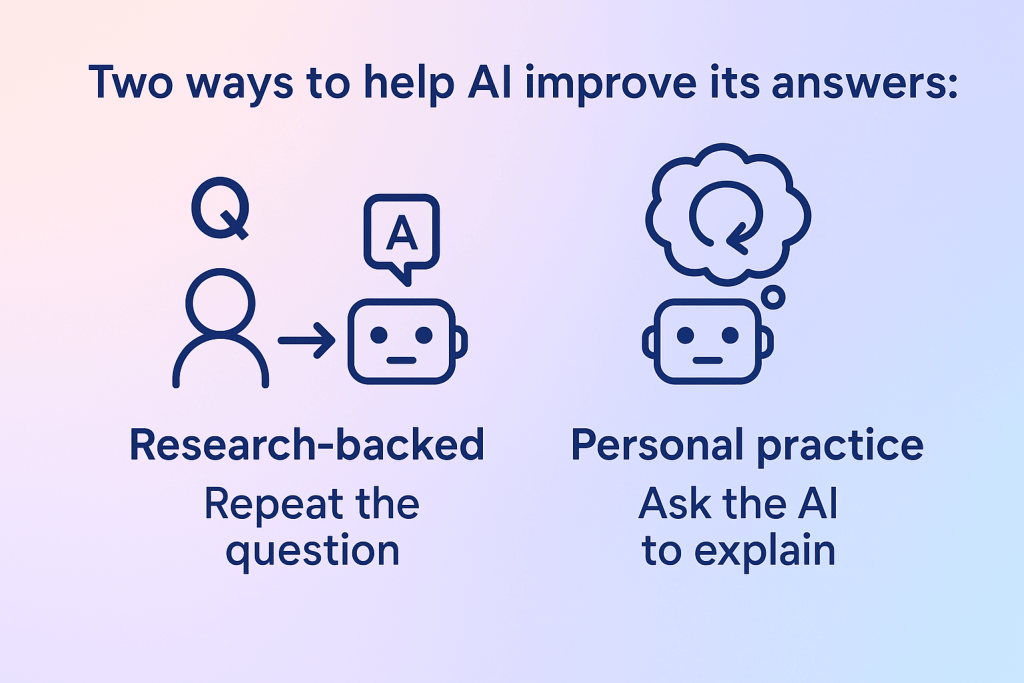

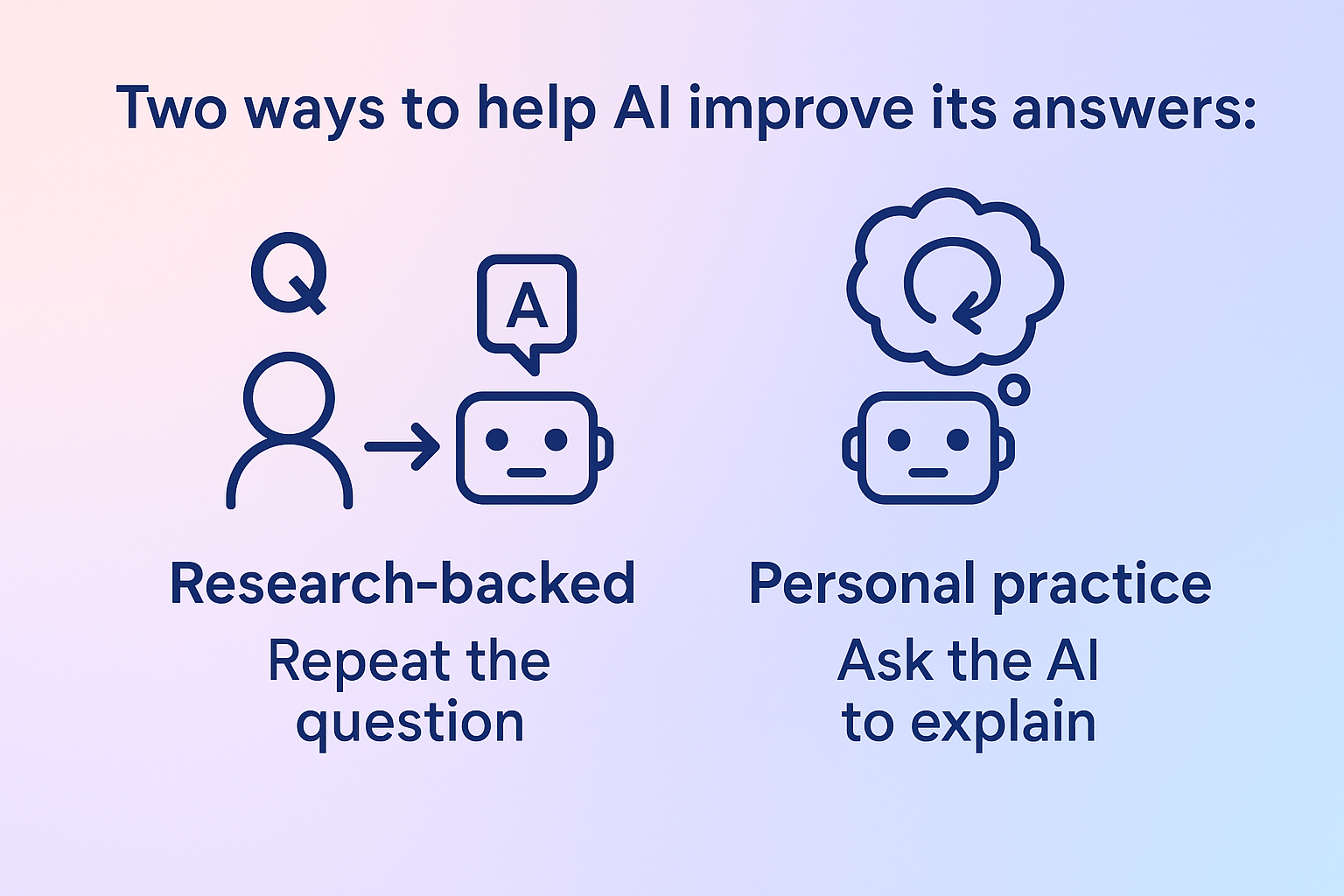

The paper proposes that repeating the question can help AI self-correct.

From my own practice, I’ve noticed another helpful approach: asking the AI to explain its answer.

When I do this, the model almost seems to “reflect.” It feels similar to reflection in human learning. When we pause to explain our reasoning, we often deepen our understanding. AI seems to benefit from a similar nudge.
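If you script your prompts, here is a rough sketch of the two follow-ups mentioned above: repeating the question and asking for an explanation. The `build_followup` helper, the strategy names, and the exact follow-up wording are my own placeholders, not something from the paper. I'm also assuming the common role/content chat-message format, so adapt it to whatever tool you actually use.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_followup(question: str, first_answer: str, strategy: str) -> List[Message]:
    """Build a chat history that ends with a follow-up instead of a bare "Are you sure?".

    Hypothetical helper for illustration only. The strategies are:
      "repeat"  - ask the original question again
      "explain" - ask the model to explain its answer
    """
    history: List[Message] = [
        {"role": "user", "content": question},
        {"role": "assistant", "content": first_answer},
    ]

    if strategy == "repeat":
        # Wording is an assumption; the idea is only to restate the question.
        followup = f"Let me ask again: {question}"
    elif strategy == "explain":
        # Wording is an assumption; the idea is to prompt for reasoning.
        followup = "Please explain the reasoning behind your answer."
    else:
        raise ValueError(f"Unknown strategy: {strategy!r}")

    history.append({"role": "user", "content": followup})
    return history


if __name__ == "__main__":
    messages = build_followup(
        "Is the earth flat or a sphere?", "A sphere.", strategy="explain"
    )
    for message in messages:
        print(f"{message['role']}: {message['content']}")
```

The returned list is just data, so you can pass it to whichever chat tool you prefer and compare how the model responds to each follow-up.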

Reflection works for learners. Turns out, it works for AI too.

How do you keep GenAI from “over-correcting” itself?
